Here, you can find reflections from our lecturer Dr Jovan Kurbalija on his style of AI conversation. This interview is conducted in the format of a Socratic inquiry.

Why do you use AI platforms?

AI is my ‘intellectual partner’ with whom I can bounce ideas back and forth and discover new angles and aspects.

Is AI better than humans?

It is different. It is available at any time. Can I call my friends to discuss an idea in the middle of the night, while I am on the bus, or just when I am out of the shower (a place for many creative inspirations)? AI is good enough at mimicking human knowledge while being ‘easy-going’ and simple to interact with.

You have not answered my question…

I have answered it quite well, but maybe not how you expected. Your question sets up a false dichotomy. Why does everything have to be better or worse than something else? Could it be just different? A bit of conversation with AI could also help us change our thinking, which is often logically rigid. Such thinking won’t help, as life is based on probabilities and heuristics.

What does it mean to have a conversation with AI?

It mimics conversation with humans. Knowledge is about conversation. As we discuss, we get new insights and ideas. AI helps us not to ‘rediscover America’, as it can tell us that many things we consider unique have already been thought of by others. AI also helps us stand on the ‘shoulders of giants’ of the past, as it can memorise more than humans.

What is most important in your conversation with AI?

Questions are critical. In AI, questions are the prompts we discuss today. I would argue that answers are less important than questions in the process of knowledge creation with AI. As Albert Einstein put it: ‘The important thing is not to stop questioning. Curiosity has its own reason for existing.’ Questions are fundamental to the advancement of knowledge. But that could be the subject of another conversation on questions and, I guess, Socratic inquiry.

You are getting too theoretical.

It is true. But what is wrong with being theoretical? Good theories are very practical. We dismiss theoretical thinking so easily these days. Even the word ‘philosophy’ is starting to get negative connotations. But I take your point. I will be practical in my explanations.

Please give us some explanation. How do you use AI tools today?

Certainly. I will explain AI prompting around the subject of AI governance. This topic came into my focus as I became worried about the current hype and confusion around AI governance and regulation. The more the topic is discussed in media and diplomacy, the less it is understood. It is a sort of ‘knowledge inflation’. As with the inflation of money, the more of a currency there is in circulation, the less each unit is worth.

Thus, I asked myself a simple question: ‘Do we need AI regulations?’ It is a counterfactual question – on the level of policy heresy – as media, politicians and academics are deeply convinced that AI must be regulated. It is portrayed as a certainty, pretty much like the sun rising in the morning. I also risk annoying many people who write books and negotiate about AI governance.

Why would you do it?

As Einstein said, we have to question even if we will annoy others. It is how we advance human knowledge and inquiry. Would you prefer to get articles and books on ‘AI governance’ as the given mantra or have the capacity to read these texts with a critical mind?

Ok. It is interesting. But you are sliding away from my question about the usefulness of AI prompting. Show us what you are trying to say here.

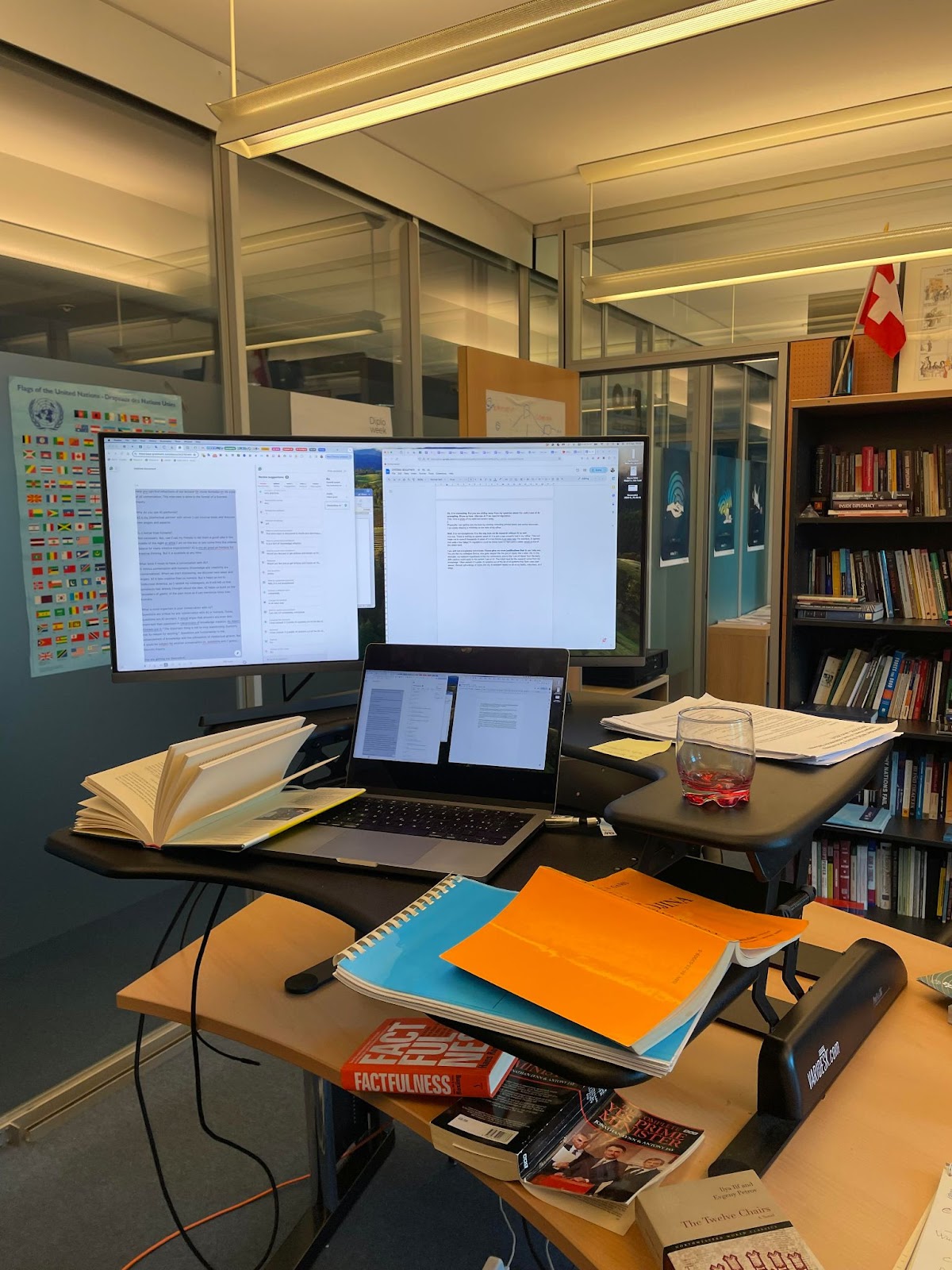

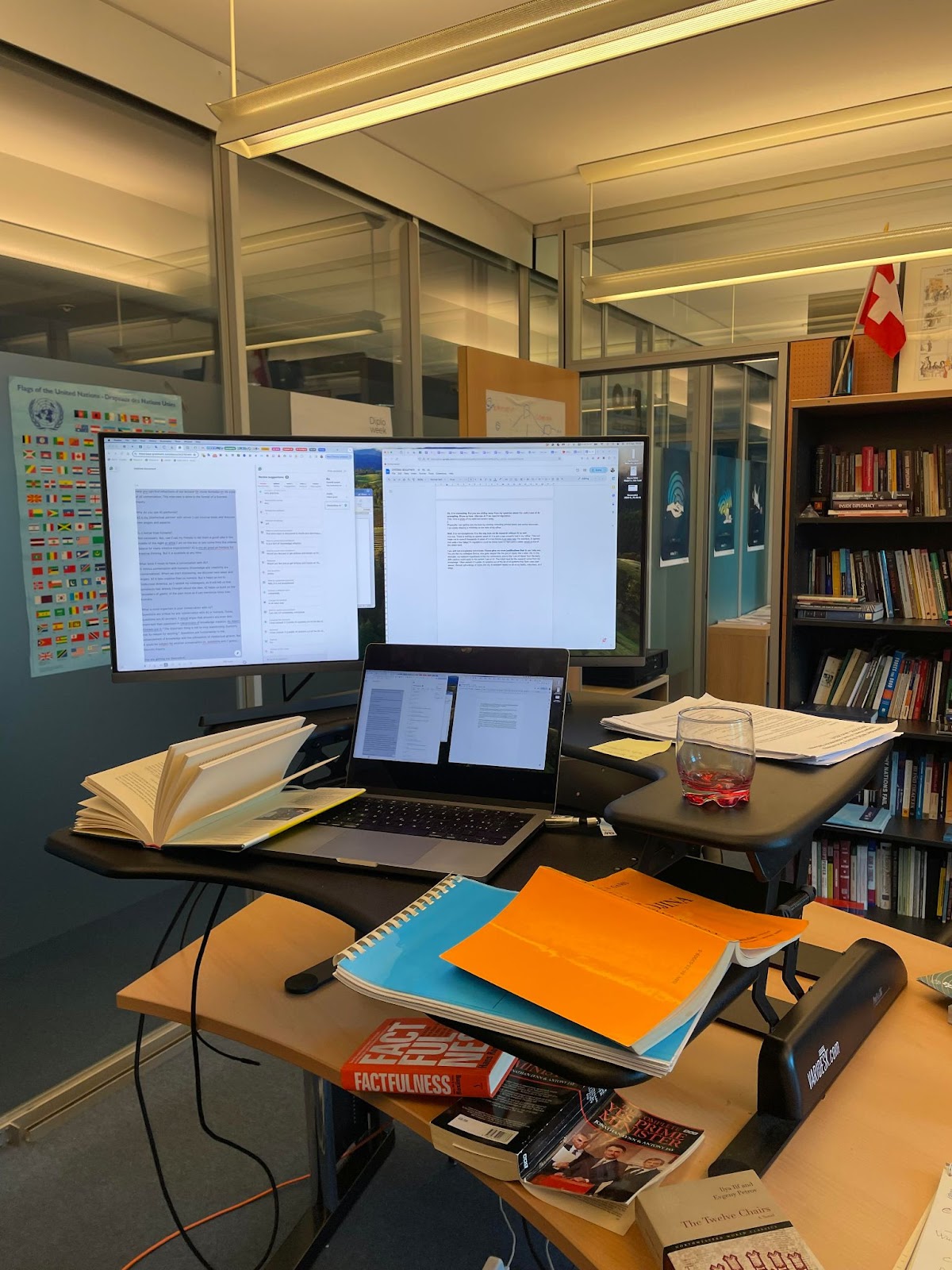

First, the question of enquiry is: Do we need to regulate AI? I took books and articles from my library and opened a few documents. One useful aid for AI prompting is a wide screen, on which you can open many documents and windows. Here is how my desk looks today…

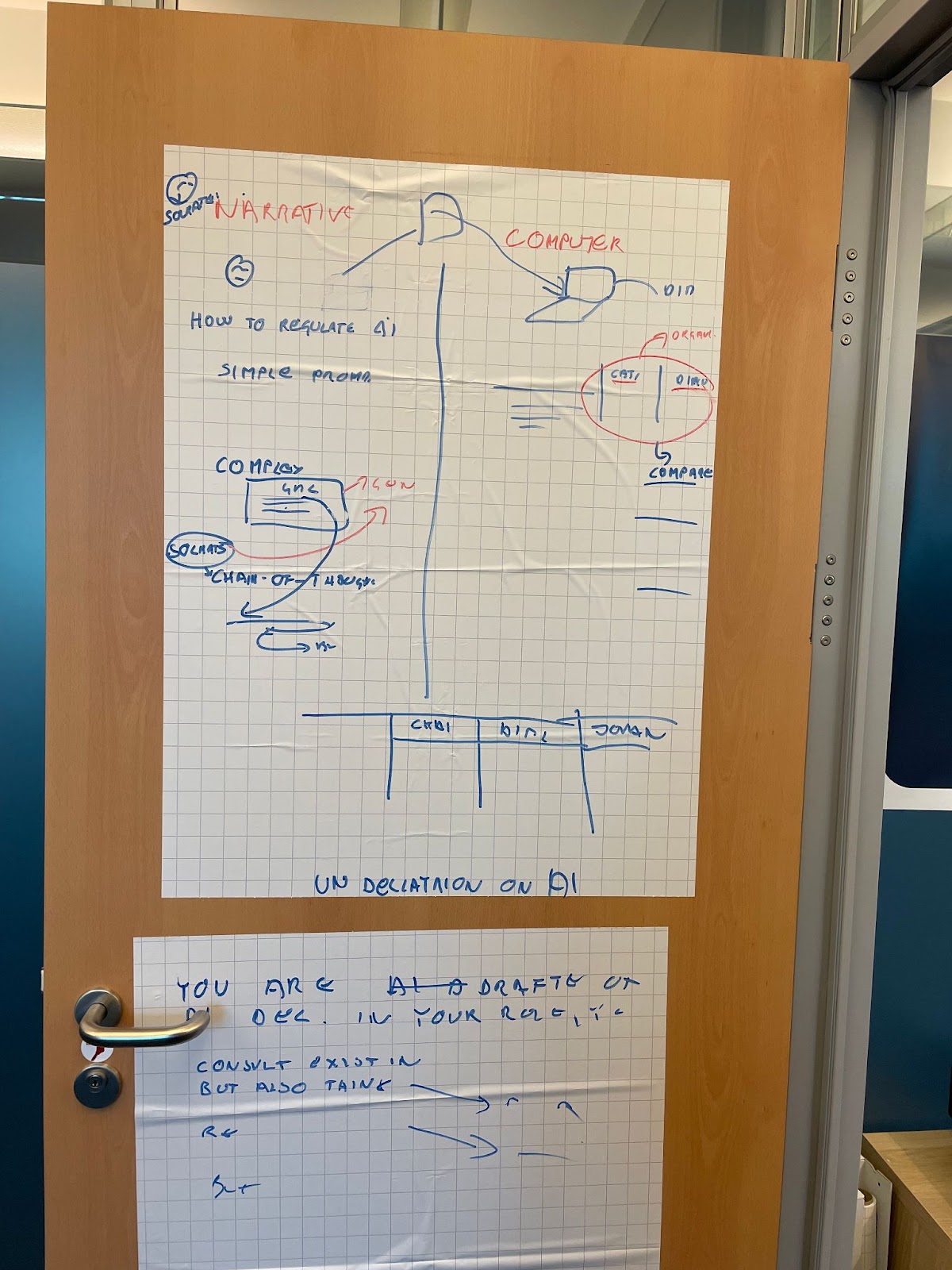

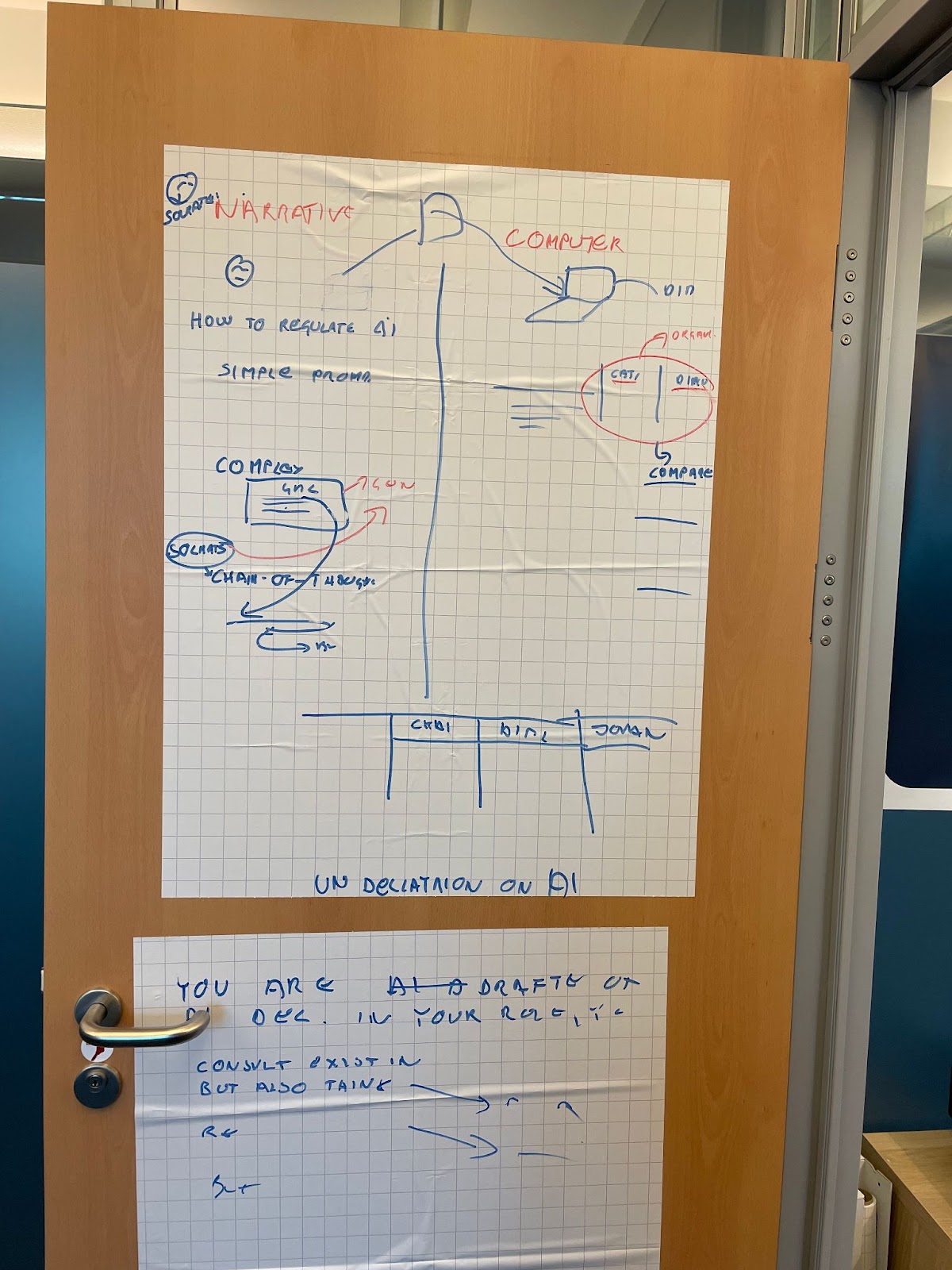

As I dive deeper into the topic, I also draw a mindmap on the door…

Well, it is nothing exceptional. It is how we do research without AI as well.

It is true. There is nothing so special about AI. It is just a new powerful tool in my ‘office’. This tool helps me to consult thousands of years of human history in an easy way. For example, AI agrees that quite a few of the ‘latest’ AI regulations could be traced back to Hammurabi’s Code, one of the oldest known written legal codes.

I am still not completely convinced. Please give me more justifications for why AI can help you.

You are like my colleague Sorina, who goes deeper into the gist of an inquiry like a laser. Ok. In this example, my research hypothesis is that the controversy around the ‘Law of the Horse’ from the late 20th century could apply to the current ‘Law of AI’. The initial input for the research came from my knowledge. I then consulted two or three publicly available AI systems from the list of all systems that Diplo uses (see above), DiploAI (all writings of Diplo), and my AI assistant based on all of my books, interviews, and blogs.

Case Study: Do we need to regulate AI?

I interacted with the following platforms.

- Diplomacy.edu (DiploAI). Content and issue analysis: all Diplo resources, blogs and other materials. Text drafting by ChatGPT 3.5.

- Ask Jovan. Content and issue analysis: blogs, books, and articles written by me. Text drafting by Claude-3-Sonnet.

- AI Governance Assistant. Content and issue analysis: AI course materials and interaction. Text drafting by Mistral Claude-3-Sonnet.

- ChatGPT 4.0 (you need to register). Content and issue analysis: no indication available about source materials.

I asked two questions. You can continue with more questions and compare different answers. We will collect anonymised questions and answers from the three Diplo systems.

You can notice ‘bias’ in my answers (Ask Jovan) as I am sceptical about the need to regulate AI. In my opinion, existing laws can regulate the majority of AI-related issues. New regulation should be introduced only exceptionally, when existing law cannot address specific AI-triggered problems. The AI recognised my bias and reflected it in its answers.

Question asked: Do we need to regulate AI?

Answers by 4 systems:

| Diplomacy.edu ChatGPT 3.5 | Based on the information provided, it seems that there is a general consensus that some form of regulation or governance framework is needed for artificial intelligence (AI), though opinions differ on the specifics. Here are some key points about regulating AI from the context: Existing laws and regulations may not be sufficient to address all the challenges and risks posed by advanced AI systems, such as issues around safety, privacy, ethics, accountability and liability. However, most sources suggest adapting and updating current regulations rather than creating entirely new, sector-wide AI regulations at this stage, in order to avoid stifling innovation. There are calls for developing guiding ethical principles, standards and best practices for the design and use of AI systems to ensure they align with human values like fairness, transparency and accountability. Establishing bodies or commissions dedicated to advising governments on AI policies, fostering public dialogue, and providing expertise is recommended in several reports. International cooperation and harmonization of technical standards and regulatory approaches to AI is encouraged to avoid fragmentation. Areas highlighted as needing regulatory attention include privacy/data protection, intellectual property rights, safety of autonomous systems like self-driving cars, and preventing bias/discrimination in AI decision-making. So in summary, while outright bans are not recommended, there seems to be an emerging consensus that some form of governance framework, whether through updating existing laws or new AI-specific regulations, is needed to manage the risks of AI in an innovation-friendly way. But the optimal approach is still being debated. |

| Ask Jovan | Based on the context provided, it seems that some level of regulation for artificial intelligence (AI) is needed, but the approach should be balanced and focus on addressing specific risks rather than overly broad restrictions. Here are a few key points on AI regulation from the context: There are different types of AI risks that need to be addressed through regulation – short-term known risks like job losses, data protection, etc.; mid-term risks like AI monopolies; and long-term existential risks. Regulation should tackle all these risks comprehensively. Most of the current debate and policy focus has been on the long-term, existential AI risks, while the short and medium-term risks have received less attention so far. A balanced approach looking at all risk timeframes is needed. The aspects that could be regulated include computation (hardware access), data (inputs to AI), algorithms, and AI system outputs/uses. Most of the current discussion centers on regulating the algorithms themselves. Regulating AI algorithms and trying to control the technology itself would be a major departure from the traditional approach of regulating digital technologies based on their uses and outputs rather than the underlying technical functioning. There are calls for more transparency from AI companies on their use of data and intellectual property in developing AI models. So in summary, some level of AI regulation seems necessary to mitigate the various risks, but it should be balanced, risk-based, and focused more on transparency and responsible development and use of AI rather than direct control over the algorithms themselves. An overly restrictive approach could stifle beneficial AI progress. |

| AI Governance Assistant | Yes, artificial intelligence (AI) should be regulated due to the potential opportunities and risks it presents, many of which have yet to materialize. The regulation of AI will be shaped by the investment of large transnational companies and the collaboration of different actors to address common concerns such as AI safety, security, and code transparency. Many countries, including Japan, are moving towards introducing hard law frameworks targeted specifically at AI. Regulation can take horizontal or sectoral forms, with the EU AI Act being an example of a horizontal regulatory approach that introduces a risk-based approach for AI systems. The Act bans exceptional-risk AI systems and strictly regulates high-risk systems, such as those used in performing surgeries. It also requires providers of general-purpose AI models with systemic risk to perform model evaluation. However, there are limitations and challenges in conducting evaluation, such as the lack of common evaluation methodologies and concerns about reliance on self-evaluation by AI companies. |

| ChatGPT 4.0 (professional) | The question of whether AI needs to be regulated is both timely and complex, reflecting a growing consensus among experts, policymakers, and industry leaders about the need for oversight to ensure AI technologies are developed and deployed in a way that is safe, ethical, and beneficial to society. Here are several reasons supporting the need for AI regulation: Ethical Considerations: AI systems can make decisions that significantly impact human lives, such as in healthcare, criminal justice, and employment. Without regulation, there’s a risk these systems might act in ways that are discriminatory, biased, or unethical. Privacy Concerns: AI technologies, especially those involving big data and facial recognition, raise substantial privacy concerns. Regulation can ensure that the development and use of AI respects individual privacy rights and data protection laws. Security Risks: AI systems can be vulnerable to attacks that manipulate their behavior in harmful ways. Regulations can set standards for AI security to protect against such vulnerabilities. Economic Impacts: AI has the potential to disrupt job markets and economies on a large scale. Regulations could help manage these impacts, ensuring that the benefits of AI are distributed fairly across society. Trust and Transparency: For AI to be widely accepted and integrated into critical sectors, it’s essential for these systems to be transparent and explainable. Regulation can promote standards for transparency and explainability in AI systems. International Competitiveness: As AI technology progresses globally, there’s a risk of a regulatory race to the bottom, where countries compete for AI innovation at the expense of ethical and safety standards. International regulations can help establish a level playing field. Avoiding Potential Harm: The long-term risks associated with advanced AI technologies, including potential existential risks, argue for proactive regulatory measures to ensure that AI development aligns with human values and safety requirements. However, regulating AI also poses challenges. It requires a delicate balance between promoting innovation and preventing harm, necessitating a nuanced understanding of the technology and its implications. Regulations need to be flexible enough to adapt to rapid advancements in AI technology while being robust enough to address the risks. The debate on AI regulation is ongoing, with some advocating for self-regulation by the tech industry, while others call for government intervention. The right approach likely lies somewhere in between, involving collaboration between governments, industry, and civil society to create a regulatory framework that encourages responsible AI development and use. |

Question asked: Most of these issues can be regulated by existing law. Why do we need new rules for AI?

Answers by 4 systems:

| Diplomacy.edu ChatGPT 3.5 | While existing laws may cover some issues related to AI, new rules are necessary for several reasons. First, AI systems can be incredibly complex and may not fit neatly into existing legal categories. Existing laws may not adequately address the unique risks and challenges posed by AI, such as issues related to bias, transparency, and accountability. Second, AI systems can have far-reaching impacts on society, including on human rights, safety, and security. New rules can help ensure that these impacts are taken into account and that appropriate safeguards are in place. Third, AI is a rapidly evolving field, and existing laws may not be able to keep pace with the latest developments. New rules can be designed to address emerging issues and to anticipate future challenges. Finally, existing laws may not be well-suited to the global nature of AI. New rules can be designed to promote international cooperation and to ensure that AI is developed and used in a way that benefits all of humanity, rather than just a few powerful actors. In summary, while existing laws may cover some issues related to AI, new rules are necessary to address the unique risks and challenges posed by AI, to ensure that the impacts of AI are taken into account, to keep pace with the latest developments in the field, and to promote international cooperation. |

| Ask Jovan | You make a fair point. Existing laws and regulations can potentially address many AI-related issues to some extent. However, AI presents some novel challenges that current laws may not adequately cover, such as governing the use of synthetic data, ensuring transparency in AI systems, and mitigating risks from potential AI monopolies. Tailored governance frameworks may be needed to keep up with the rapid pace of AI development and its wide-ranging impacts across sectors. But you’re right that we should aim to leverage and adapt existing laws where possible before creating entirely new AI-specific rules. |

| AI Governance Assistant | While existing laws may cover some issues related to AI, new rules are necessary for several reasons. First, AI systems can be incredibly complex and may not fit neatly into existing legal categories. Existing laws may not adequately address the unique risks and challenges posed by AI, such as issues related to bias, transparency, and accountability. Second, AI systems can have far-reaching impacts on society, including on human rights, safety, and security. New rules can help ensure that these impacts are taken into account and that appropriate safeguards are in place. Third, AI is a rapidly evolving field, and existing laws may not be able to keep pace with the latest developments. New rules can be designed to address emerging issues and to anticipate future challenges. Finally, existing laws may not be well-suited to the global nature of AI. New rules can be designed to promote international cooperation and to ensure that AI is developed and used in a way that benefits all of humanity, rather than just a few powerful actors. In summary, while existing laws may cover some issues related to AI, new rules are necessary to address the unique risks and challenges posed by AI, to ensure that the impacts of AI are taken into account, to keep pace with the latest developments in the field, and to promote international cooperation. |

| ChatGPT 4.0 (professional) | While it’s true that existing laws cover some aspects relevant to AI, such as data protection, privacy, and non-discrimination, the unique characteristics and challenges posed by AI technologies often go beyond the scope of current legal frameworks. Here are several reasons why new rules specifically tailored to AI might be necessary: Rapid Technological Advancement: AI technologies evolve at a pace that existing legal frameworks struggle to keep up with. New rules or amendments are often required to address novel issues that arise, ensuring the law remains relevant and effective. Complexity and Opacity: AI systems, especially those based on deep learning, can be highly complex and opaque, making it difficult to understand how decisions are made. This opacity challenges traditional legal concepts of accountability, liability, and transparency. New rules could set standards for explainability and accountability specific to AI technologies. Scale and Impact: The scale at which AI systems can operate and their potential societal impact are unprecedented. For instance, an AI system could influence millions of job prospects, loan applications, or medical treatments. Existing laws might not adequately address the scale of impact or provide sufficient safeguards against misuse or harm. Global Reach: AI technologies often operate across national borders, raising jurisdictional challenges. While existing international laws address some cross-border issues, the global nature of AI may necessitate new international agreements or frameworks to ensure consistent and fair regulation. Specific Risks and Ethical Concerns: AI introduces specific risks and ethical concerns that existing laws may not adequately prevent or mitigate, such as algorithmic bias, the automation of social manipulation, or the use of lethal autonomous weapons. New rules can specifically target these risks, establishing clear boundaries and ethical standards for AI development and use. Economic and Social Shifts: The potential for AI to disrupt job markets, exacerbate inequality, and alter social dynamics might require new forms of regulatory intervention to ensure that the benefits of AI are equitably distributed and that social safety nets are in place. Preemptive Measures: Given the potential long-term risks associated with more advanced AI systems, including scenarios where AI could pose significant threats to humanity, new rules might be needed to guide safe development paths and ensure that robust safety measures are implemented well in advance. Existing laws provide a foundation, but AI-specific regulation can offer a more focused and adaptable framework to address the nuanced challenges of AI. Such regulation would not necessarily replace existing laws but complement them, ensuring a comprehensive legal and ethical framework for the advancement and integration of AI into society. |
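If you continue the exercise with more questions, reading every answer side by side quickly becomes tiring. A small script can give a first, rough indication of which systems answer alike. The sketch below is not part of the original exercise: it assumes you have pasted each answer into a Python dictionary yourself, the system names and shortened answers are placeholders, and the word-overlap (Jaccard) score is only one simple way to measure similarity. It compares vocabulary, not meaning.

```python
from itertools import combinations


def word_set(text: str) -> set[str]:
    """Lower-case the text and return its set of alphabetic words."""
    cleaned = "".join(ch.lower() if ch.isalpha() else " " for ch in text)
    return set(cleaned.split())


def jaccard(a: str, b: str) -> float:
    """Share of distinct words that two answers have in common (0.0 to 1.0)."""
    words_a, words_b = word_set(a), word_set(b)
    union = words_a | words_b
    # Two empty answers are treated as identical to avoid dividing by zero.
    return len(words_a & words_b) / len(union) if union else 1.0


def compare_answers(answers: dict[str, str]) -> list[tuple[str, str, float]]:
    """Score every pair of systems and return the most similar pair first."""
    scores = [
        (first, second, jaccard(answers[first], answers[second]))
        for first, second in combinations(answers, 2)
    ]
    return sorted(scores, key=lambda item: item[2], reverse=True)


# Placeholder answers, shortened for illustration; paste the full texts in practice.
answers = {
    "System A": "New rules are necessary because AI is complex.",
    "System B": "New rules are necessary because AI is complex.",
    "System C": "Existing laws can be adapted before creating new rules.",
}

for first, second, score in compare_answers(answers):
    print(f"{first} vs {second}: {score:.2f}")
```

A score of 1.00 means two systems used exactly the same vocabulary, which is worth a closer look: it may point to a shared underlying model or to a copy-and-paste slip when collecting the answers. Low scores only show that the wording differs, so the substance still has to be judged by reading.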